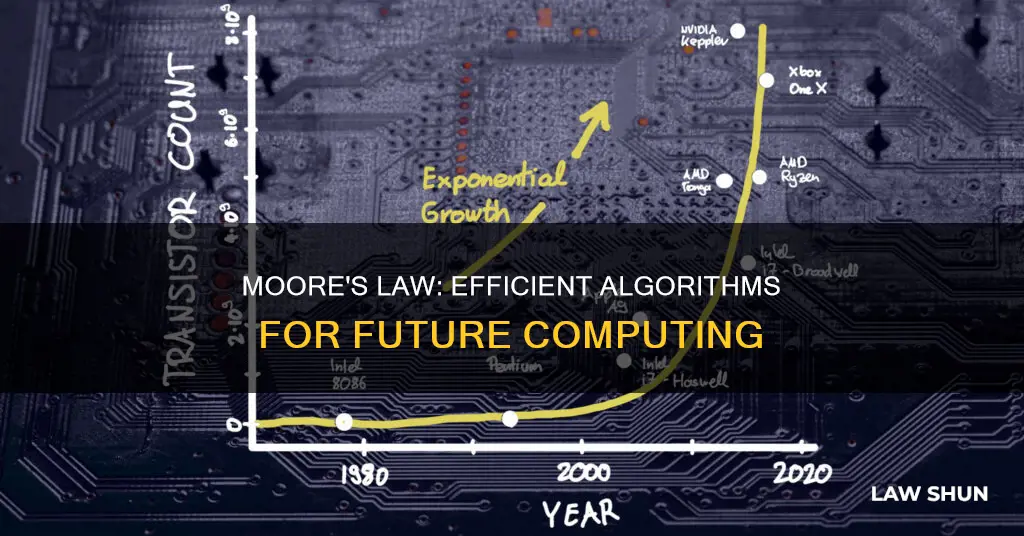

Moore's Law, an empirical relationship named after Intel co-founder Gordon Moore, states that the number of transistors in an integrated circuit (IC) doubles about every two years. This prediction has held since 1975 and has been a driving force of technological and social change, influencing the fields of computing, transportation, healthcare, education, and energy production. While Moore's Law is reaching its physical limitations, the evolution of computer hardware and algorithmic efficiency has led to exponential growth in computing power. Algorithmic improvements, such as those in linear algebra, enable more efficient use of resources, allowing computers to perform tasks faster and cheaper. The combination of hardware advancements and algorithmic innovations will continue to drive progress in computer science and various industries.
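The doubling rule can be written as a one-line formula: the count after t years is the starting count times 2^(t/2). The short sketch below is only an illustration; the function name and the starting figure of 1,000 transistors are hypothetical and not taken from any real chip.

```python
def projected_transistors(initial_count, years, doubling_period=2.0):
    """Transistor count after `years`, assuming one doubling per `doubling_period` years."""
    return initial_count * 2 ** (years / doubling_period)


# Hypothetical starting point of 1,000 transistors, purely for illustration.
for years in (2, 10, 20):
    print(years, projected_transistors(1_000, years))
```

Ten years correspond to five doublings, a factor of 32; twenty years correspond to ten doublings, a factor of 1,024.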

| Characteristics | Values |
|---|---|
| Transistor count on a microprocessor doubles | Every two years |
| Algorithmic improvements | Make more efficient use of existing resources |
| Algorithmic commons | Helps all programmers produce better software |
| Algorithmic improvements | Allow computers to do a task faster, cheaper, or both |

What You'll Learn

- The efficiency of algorithms is improved by optimising discretization methods

- Linear algebra is a major workhorse for simulations

- Algorithmic improvements can be more impactful than hardware improvements

- Moore's Law is waning, but computing technology will continue to improve

- Applied mathematics and computational algorithms will continue to be foundational for innovation

The efficiency of algorithms is improved by optimising discretization methods

Moore's Law, an observation made by Gordon Moore in 1965, states that the number of transistors on a microchip doubles roughly every two years, which implies that computing hardware becomes faster, smaller, and more efficient over time. It has directly shaped the progress of computing power by giving chip makers a target to aim for.

Discretization methods fall into two categories: unsupervised methods, which do not use any information in the target variable (e.g., disease state), and supervised methods, which do. Supervised discretization is generally more beneficial to classification, because it can also identify variables that have little or no correlation with the target variable and remove them as inputs to the classification algorithm.

Optimising discretization methods can therefore improve the efficiency of algorithms by reducing the complexity of the data and lowering the cost of data mining and machine learning. A well-chosen method can also preserve the intrinsic correlation structure of the original data, even though the geometric topology of unevenly distributed data is easily distorted when it is mapped in high-dimensional space.
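The difference between the two categories can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function names, the toy data, and the choice of class entropy as the supervised criterion are all assumptions made for this example.

```python
import numpy as np


def equal_width_bins(values, n_bins):
    """Unsupervised: split the value range into n_bins equal-width intervals."""
    edges = np.linspace(values.min(), values.max(), n_bins + 1)
    # Use interior edges only, so bin labels run from 0 to n_bins - 1.
    return np.digitize(values, edges[1:-1])


def entropy(labels):
    """Shannon entropy (in bits) of an array of class labels."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return float(-(p * np.log2(p)).sum())


def best_supervised_cut(values, target):
    """Supervised: pick the single cut point that minimises the
    size-weighted class entropy of the two resulting bins."""
    order = np.argsort(values)
    v, t = values[order], target[order]
    best_cut, best_score = None, entropy(t)
    for i in range(1, len(v)):
        if v[i] == v[i - 1]:
            continue  # no cut possible between identical values
        score = (i * entropy(t[:i]) + (len(v) - i) * entropy(t[i:])) / len(v)
        if score < best_score:
            best_cut, best_score = (v[i - 1] + v[i]) / 2, score
    return best_cut, best_score


# Hypothetical toy data: one measurement and a binary target (e.g., disease state).
values = np.array([1.0, 2.0, 3.0, 10.0, 11.0, 12.0])
target = np.array([0, 0, 0, 1, 1, 1])
print(equal_width_bins(values, 2))
print(best_supervised_cut(values, target))
```

Under this criterion, a variable whose best cut barely lowers the entropy carries little information about the target, which is one simple way a supervised method can flag candidates for removal.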

Linear algebra is a major workhorse for simulations

Moore's Law, an observation made by Gordon Moore in 1965, states that the number of transistors on a microchip doubles about every two years with minimal cost increase. This prediction has held since 1975 and has been a driving force in technological and social change, as well as economic growth.

As transistors in integrated circuits become smaller, computers become faster and more efficient. Linear algebra is a major workhorse for simulations, and the improvements in computer processing power due to Moore's Law have greatly benefited this field.

Linear algebra is a branch of mathematics that deals with linear equations and transformations. It involves the manipulation and analysis of vectors and matrices, which are fundamental tools for representing and solving systems of linear equations. Linear algebra is widely used in simulations across various fields, including physics, engineering, computer graphics, and data science.

Simulations often involve complex mathematical models that describe the behaviour of a system over time. These models typically consist of a set of equations that represent the relationships between different variables in the system. By using linear algebra, these equations can be solved efficiently, even for large and complex systems.
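As a small illustration of solving such a set of equations, the sketch below uses NumPy's `numpy.linalg.solve` on a hypothetical three-variable system. The matrix and right-hand side are invented for this example and do not come from any particular simulation.

```python
import numpy as np

# Hypothetical 3-variable system A x = b, chosen only for illustration.
A = np.array([[4.0, -1.0, 0.0],
              [-1.0, 4.0, -1.0],
              [0.0, -1.0, 4.0]])
b = np.array([2.0, 4.0, 10.0])

# Direct solve of the dense system.
x = np.linalg.solve(A, b)

# The residual norm checks how well the computed x satisfies A x = b.
residual = np.linalg.norm(A @ x - b)
print(x, residual)
```

Real simulation models produce systems with millions of unknowns, where the choice of solver matters far more than it does in this toy case.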

For example, in physics and engineering, simulations might involve modelling the motion of objects in space or the behaviour of structures under different loads. Linear algebra allows for the efficient calculation of object trajectories, the application of forces and transformations, and the solution of systems of equations describing the behaviour of structures.

In computer graphics, linear algebra is essential for tasks such as 3D rendering and animation. Matrices are used to represent transformations in 3D space, such as rotations, translations, and scaling. By manipulating these matrices using linear algebra, objects can be transformed and displayed on a 2D screen.
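A minimal sketch of this idea uses 4x4 homogeneous matrices, a common convention in graphics. The function names, the sample point, and the simplified pinhole projection (divide x and y by depth) are assumptions made for this illustration; real rendering pipelines use more elaborate camera and projection matrices.

```python
import numpy as np


def scaling(sx, sy, sz):
    """4x4 homogeneous scaling matrix."""
    return np.diag([sx, sy, sz, 1.0])


def rotation_z(angle_rad):
    """4x4 homogeneous rotation about the z-axis."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array([[c, -s, 0.0, 0.0],
                     [s, c, 0.0, 0.0],
                     [0.0, 0.0, 1.0, 0.0],
                     [0.0, 0.0, 0.0, 1.0]])


def translation(tx, ty, tz):
    """4x4 homogeneous translation matrix."""
    m = np.eye(4)
    m[:3, 3] = [tx, ty, tz]
    return m


# A point in homogeneous coordinates (x, y, z, 1).
point = np.array([1.0, 0.0, 0.0, 1.0])

# Matrices apply right to left: scale first, then rotate, then translate.
model = translation(0.0, 0.0, 5.0) @ rotation_z(np.pi / 2) @ scaling(2.0, 2.0, 2.0)
transformed = model @ point

# Simplified pinhole projection onto a 2D screen: divide x and y by depth z.
screen = transformed[:2] / transformed[2]
print(transformed, screen)
```

Because the three transformations collapse into a single matrix, the same `model` can be applied to every vertex of an object with one matrix product each.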

Additionally, linear algebra is a powerful tool for data analysis and machine learning. It provides techniques for dimensionality reduction, data compression, and pattern recognition. For example, principal component analysis (PCA) is a linear algebra technique that allows for the identification of the most important features in a dataset, enabling more efficient processing and modelling.
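PCA can be sketched with nothing more than a singular value decomposition. The function name and the synthetic dataset below are assumptions for illustration; library implementations add options such as whitening and randomised solvers.

```python
import numpy as np


def pca(data, n_components):
    """Project `data` (rows = samples) onto its top principal components."""
    centered = data - data.mean(axis=0)
    # Rows of vt are the principal directions, ordered by singular value.
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:n_components]
    explained_variance = singular_values[:n_components] ** 2 / (len(data) - 1)
    return centered @ components.T, components, explained_variance


# Synthetic data: points scattered closely around the line y = 2x.
rng = np.random.default_rng(0)
t = rng.normal(size=200)
data = np.outer(t, [1.0, 2.0]) + 0.01 * rng.normal(size=(200, 2))

projected, components, variance = pca(data, n_components=1)
print(components[0], variance)
```

Here a single component captures almost all of the variation, so the two-column dataset can be stored and processed as one column with little loss.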

The increased processing power and reduced costs brought about by Moore's Law have made it possible to run more complex simulations and process larger datasets. This has enabled advancements in various fields that rely on simulations, such as scientific research, product development, and decision-making in industries like finance and healthcare.

While Moore's Law has had a significant impact, it is important to note that it is not an actual law of physics but rather an empirical relationship. Some believe that Moore's Law will reach its physical limits in the 2020s due to increasing costs and challenges in cooling a large number of components in a small space. However, alternative approaches, such as cloud computing and quantum computing, may help overcome these limitations and continue the trend of improving computational efficiency.

Algorithmic improvements can be more impactful than hardware improvements

Moore's Law, formulated by Intel co-founder Gordon Moore in 1965, states that the number of transistors on a microprocessor doubles about every two years. This prediction has held true since 1975 and has been a driving force of technological and social change, influencing the fields of computing, transportation, healthcare, education, and energy production.

While Moore's Law has been significant, the evolution in computer hardware alone would not have yielded the remarkable simulation capabilities we witness today. Algorithmic improvements have been a critical, yet lesser-known, contributor to advancements in technology.

A new algorithm running on an older computer can produce a solution significantly faster than an older algorithm running on a newer computer. Engineers can now simulate more intricate geometries, fluid dynamics, and complex physical interactions. Complex simulations that once took days or weeks can now be completed in a matter of hours or minutes, allowing for broader design exploration and quicker, more informed decision-making.

- Optimal Discretization of Physics Equations: Discretization is the process of converting continuous functions, such as velocity as a function of time and space, into a finite number of variables in discrete locations. Choosing the right discretization method is crucial for achieving the desired accuracy and minimizing computation time. Advanced algorithms provide valuable options, such as maximizing predictive accuracy with a given number of discretization points or minimizing the number of points while maintaining the required accuracy.

- Linear Algebra: Solving systems of linear equations is fundamental to most simulation tools and can be computationally demanding. Multigrid methods have been developed to exploit the hierarchical structure of many systems, allowing for more efficient solutions. For example, halving the discretization length in a 3D physics problem multiplies the number of unknowns by 8. With classic Gaussian elimination, whose cost grows with the cube of the number of unknowns, the computation would take 512 times longer. With multigrid methods, whose cost grows linearly, it would take only 8 times longer, a significant improvement in efficiency.
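The factors of 512 and 8 quoted above can be reproduced with a short calculation. It assumes the usual textbook cost models: roughly N^3 operations for dense Gaussian elimination and roughly N for multigrid, where N is the number of unknowns. The function name is hypothetical.

```python
def cost_growth(refinement, dimensions, complexity_exponent):
    """Factor by which solve time grows when the grid spacing shrinks by
    `refinement` in `dimensions` dimensions, for a solver whose cost
    scales as N ** complexity_exponent."""
    unknowns_growth = refinement ** dimensions  # 2 ** 3 = 8 times more unknowns
    return unknowns_growth ** complexity_exponent


print(cost_growth(2, 3, 3))  # dense Gaussian elimination, cost ~ N^3
print(cost_growth(2, 3, 1))  # multigrid, cost ~ N
```

The gap widens with every further refinement: halving the spacing twice gives 64 times as many unknowns, which costs a factor of 262,144 with the cubic method but only 64 with the linear one.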

In conclusion, while Moore's Law has enabled great progress in the semiconductor industry, algorithmic improvements have often been more impactful in driving computer science forward. These improvements have led to more efficient utilization of resources, enabling computers to perform tasks faster and cheaper. The development of efficient algorithms will continue to be a key driver of innovation in the coming decades.

Moore's Law is waning, but computing technology will continue to improve

Moore's Law, an observation made by Intel co-founder Gordon Moore in 1965, states that the number of transistors on a microchip doubles about every two years with minimal cost increase. It has been a driving force of technological and social change: computers, and the machines that run on them, have become smaller, faster, and cheaper over time.

However, Moore's Law is facing challenges due to physical limitations in transistor size and increasing costs for chip manufacturers. These factors have led some to believe that Moore's Law will reach its natural end in the 2020s. Despite this, computing technology will continue to improve through innovations in 3D chip technology, DNA computing, and quantum computing.

The physical limitations of Moore's Law are primarily due to the size of atoms, which sets a fundamental barrier to how small transistors can be. As transistors reach the limits of miniaturization, chip manufacturers face difficulties in cooling an increasing number of components in a small space. Additionally, the cost of research and development, manufacturing, and testing has been steadily increasing with each new generation of chips.

To address these challenges, chip manufacturers are exploring new approaches such as 3D chip stacking, where chips are stacked vertically to increase transistor density and improve speed and power efficiency. Another approach under research is DNA computing, which uses DNA molecules to perform calculations and which its proponents say could eventually outperform today's supercomputers on some problems.

Quantum computing is another disruptive technology that could surpass the limitations of Moore's Law. In quantum computing, the basic unit of computation is the quantum bit, or qubit. Each additional qubit doubles the number of states the machine can represent at once, so the state space grows exponentially with the number of qubits. For suitable problems, this growth could lead to unprecedented advancements in various fields.
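One way to make the doubling concrete is to count what a classical computer would need to describe an n-qubit state: 2^n complex amplitudes. The sketch below assumes 16 bytes per amplitude (two 64-bit floats), and the function names are hypothetical. It measures the size of the state space, not the speed-up a quantum computer would achieve on a given task.

```python
def state_vector_size(n_qubits):
    """Number of complex amplitudes in a general n-qubit state."""
    return 2 ** n_qubits


def state_vector_bytes(n_qubits, bytes_per_amplitude=16):
    """Memory needed to store that state classically (16 bytes = complex128)."""
    return state_vector_size(n_qubits) * bytes_per_amplitude


for n in (10, 30, 50):
    print(n, state_vector_size(n), state_vector_bytes(n))
```

At 30 qubits the state already occupies 16 GiB under this assumption, and each extra qubit doubles that figure.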

While Moore's Law may be waning, the pursuit of innovation in computing technology remains strong. Through advancements in chip design, materials science, and alternative computing paradigms, the future of computing holds immense potential for continued progress and improved efficiency.

Applied mathematics and computational algorithms will continue to be foundational for innovation

Moore's Law, an empirical relationship named after Intel co-founder Gordon Moore, states that the number of transistors in an integrated circuit (IC) doubles about every two years. This prediction has held since 1975 and has been foundational to the semiconductor industry, guiding long-term planning and research and development targets.

While Moore's Law has been consequential, the evolution of computer hardware alone would not have delivered the simulation capabilities we now have. A lesser-known contributor has been the improvement in algorithmic efficiency. Our ability to make algorithms faster and more capable is critical for simulation.

Algorithms in 2023 are far faster and more efficient than their predecessors. A new algorithm running on a 20-year-old computer will generate a solution in significantly less time than a 20-year-old algorithm running on a new computer. As a result, engineers can simulate more detailed geometries, intricate fluid dynamics, and complex physical interactions.

There are two areas that underpin most simulation tools where algorithms are improving: optimal discretization of physics equations and linear algebra. Choosing an effective discretization method is crucial as it directly influences prediction accuracy and computation time. In the past, choosing the best method was a task best left to experts. Today, we have algorithms that provide us with valuable options.
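The trade-off between accuracy and computation time can be seen in a very small case. The sketch below discretizes sin(x) on a uniform grid and approximates its derivative with central differences. The test function, grid sizes, and function name are assumptions chosen for illustration.

```python
import numpy as np


def max_derivative_error(n_points):
    """Largest error of the central-difference derivative of sin(x)
    on a uniform grid of n_points over [0, pi]."""
    x = np.linspace(0.0, np.pi, n_points)
    h = x[1] - x[0]
    u = np.sin(x)
    # Central difference at the interior grid points.
    du = (u[2:] - u[:-2]) / (2.0 * h)
    return float(np.max(np.abs(du - np.cos(x[1:-1]))))


for n in (11, 21, 41):
    print(n, max_derivative_error(n))
```

Each halving of the grid spacing cuts the error by roughly a factor of four, at the price of twice as many points in one dimension and eight times as many in three. A higher-order scheme or a non-uniform grid can reach the same accuracy with fewer points, which is the kind of choice the paragraph above refers to.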

Solving a system of linear equations is at the core of nearly every simulation tool. Gaussian elimination from the 18th century is the most basic algorithm for this purpose, but it is extremely demanding computationally. In recent decades, mathematical researchers have continuously reduced the number of computations by exploiting the structure of the underlying systems. Today, we use multigrid methods to exploit the hierarchical structure of many systems. This is how we can solve systems of linear equations with linear complexity, meaning that the work grows in proportion to the number of unknowns.
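To give a feel for how a multigrid method uses a hierarchy of grids, here is a minimal sketch of a V-cycle for the 1D Poisson problem -u'' = f with zero boundary values. The model problem, the weighted-Jacobi smoother, the grid size, and all function names are assumptions chosen for illustration; production solvers are considerably more elaborate.

```python
import numpy as np


def residual(u, f, h):
    """r = f - A u for the standard 3-point discretization of -u''."""
    r = np.zeros_like(u)
    r[1:-1] = f[1:-1] - (2.0 * u[1:-1] - u[:-2] - u[2:]) / h**2
    return r


def smooth(u, f, h, sweeps=3, omega=2.0 / 3.0):
    """Weighted-Jacobi sweeps; they damp the high-frequency error."""
    for _ in range(sweeps):
        new = u.copy()
        new[1:-1] = (1.0 - omega) * u[1:-1] + omega * 0.5 * (
            u[:-2] + u[2:] + h**2 * f[1:-1])
        u = new
    return u


def restrict(r):
    """Full-weighting restriction to the grid with twice the spacing."""
    rc = np.zeros((len(r) - 1) // 2 + 1)
    rc[1:-1] = 0.25 * r[1:-3:2] + 0.5 * r[2:-2:2] + 0.25 * r[3:-1:2]
    return rc


def prolong(ec, n_fine):
    """Linear interpolation of a coarse-grid correction to the fine grid."""
    e = np.zeros(n_fine)
    e[::2] = ec
    e[1::2] = 0.5 * (ec[:-1] + ec[1:])
    return e


def v_cycle(u, f, h):
    """One V-cycle: smooth, correct from the coarser grid, smooth again."""
    if len(u) <= 3:
        # Coarsest grid has a single interior point: solve it exactly.
        u[1] = 0.5 * (u[0] + u[2] + h**2 * f[1])
        return u
    u = smooth(u, f, h)
    rc = restrict(residual(u, f, h))
    ec = v_cycle(np.zeros_like(rc), rc, 2.0 * h)
    u = u + prolong(ec, len(u))
    return smooth(u, f, h)


# Test problem with known solution u(x) = sin(pi x).
n = 64  # number of intervals; must be a power of two
x = np.linspace(0.0, 1.0, n + 1)
f = np.pi**2 * np.sin(np.pi * x)
u = np.zeros(n + 1)
for _ in range(10):
    u = v_cycle(u, f, 1.0 / n)
print(np.max(np.abs(u - np.sin(np.pi * x))))
```

Each level does work proportional to its number of points, and each coarser grid has half as many, so one V-cycle costs a small constant multiple of the finest-grid work. That is where the linear complexity comes from.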

In the future, highly parallel multigrid algorithms specifically tuned for computer architectures such as graphics processing units (GPUs) will be the major workhorses of 3D multiphysics simulations. This shift towards parallel computing architectures is one of the many "More-than-Moore" technologies that will drive the exponential growth of computing power.

Moore's Law is reaching its limitations. However, computer technology will continue to improve as new technologies are developed and existing ones get better. Applied mathematics and computational algorithms look set to remain the foundation of innovation for the next 50 to 75 years. The combination of machine learning and classical simulation technology, for example, is creating completely new opportunities.

Frequently asked questions

What is Moore's Law?

Moore's Law states that the number of transistors on a microchip doubles about every two years with a minimal cost increase.

How has Moore's Law influenced computing?

Moore's Law has been a driving force in the semiconductor industry, influencing the progress of computing power and guiding long-term planning and research. This has led to advancements in digital electronics, such as increased memory capacity and improved sensors, which in turn have allowed algorithms to run more efficiently.

What do algorithmic improvements contribute?

Algorithmic improvements make more efficient use of existing resources and allow computers to perform tasks faster and cheaper. For example, the compression algorithms behind the MP3 format made music easier to store and transfer.

Is Moore's Law coming to an end?

Moore's Law is reaching its limitations due to the physical constraints of transistor size and semiconductor manufacturing capabilities. However, new technologies such as parallel computing architectures and quantum computing are expected to drive continued growth in computing power.