Beer's Law is a limiting law that is valid only for low concentrations of analyte. It states that concentration and absorbance are directly proportional to each other. However, there are several reasons why Beer's Law may not apply.

Firstly, Beer's Law assumes that the molecules absorbing radiation do not interact with each other. At higher concentrations, the individual particles of analyte are no longer independent of each other, and their proximity makes interactions more likely, which may change the analyte's absorptivity. Secondly, an analyte's absorptivity depends on the solution's refractive index, which varies with the analyte's concentration.

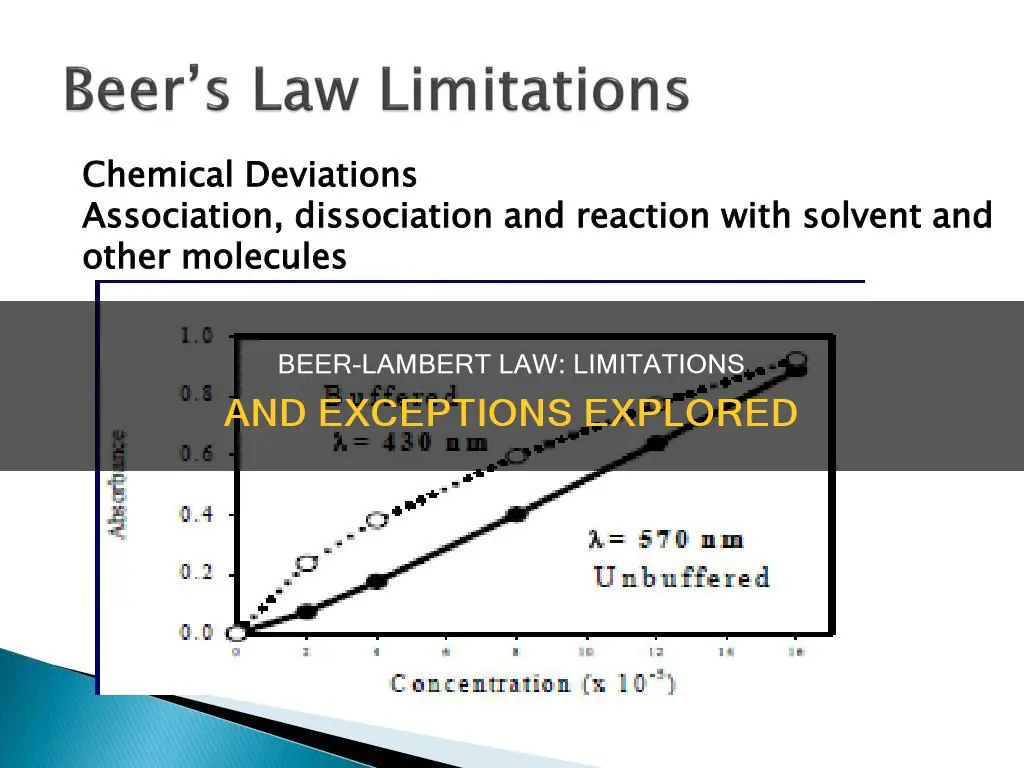

Additionally, Beer's Law assumes purely monochromatic radiation, but it is not possible to achieve purely monochromatic radiation using a dispersing element with a slit. The net effect is that the total absorbance added over all the different wavelengths is no longer linear with concentration, resulting in a negative deviation at higher concentrations.

Furthermore, Beer's Law assumes that there is no scattering of the light beam and that there are no stray radiation effects. Finally, the law may not apply when different types of molecules are in equilibrium with each other, when an association complex is formed by the solute and the solvent, when fluorescent compounds are used, or when thermal equilibrium is attained between the excited state and the ground state.
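To illustrate the equilibrium case, here is a minimal sketch assuming an unbuffered weak acid HA that partly dissociates into A-, where the two forms have different molar absorptivities. The function name, the dissociation constant, and the absorptivity values are hypothetical and chosen only for illustration; water autoionisation is ignored.

```python
import math


def weak_acid_absorbance(total_conc, ka, eps_ha, eps_a, path_cm=1.0):
    """Absorbance of an unbuffered weak acid HA <=> H+ + A-.

    Solves Ka = x**2 / (C - x) for x = [A-], then adds the
    contributions of the two absorbing forms.
    """
    x = (-ka + math.sqrt(ka * ka + 4.0 * ka * total_conc)) / 2.0
    return path_cm * (eps_ha * (total_conc - x) + eps_a * x)


# Hypothetical values: Ka = 1e-4, eps(HA) = 2000, eps(A-) = 500 L mol^-1 cm^-1
for conc in (1e-6, 1e-4, 1e-2):
    a = weak_acid_absorbance(conc, ka=1e-4, eps_ha=2000.0, eps_a=500.0)
    # With the default 1 cm path, a / conc is the apparent molar absorptivity
    print(f"C={conc:.0e} M  A={a:.4g}  A/(bC)={a / conc:.0f}")
```

The apparent molar absorptivity A/(bC) is not constant because the fraction of analyte present as HA changes with the total concentration, so a plot of absorbance against total concentration is curved. The direction of the curvature depends on which form absorbs more strongly.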

| Factor | Effect on Beer's Law |
|---|---|
| Concentration | Beer's Law is valid for low concentrations of analyte. A commonly quoted rule of thumb puts the upper limit at about 10 mM, that is, "high concentration" means > 0.01 M. |
| Analyte Interaction | At higher concentrations, the individual particles of analyte are no longer independent of each other and may interact, changing the analyte's absorptivity. |
| Solution's Refractive Index | A solution's refractive index varies with the analyte's concentration, and so the values of a (absorptivity) and ε (molar absorptivity) may change. |
| Equilibrium Reaction | If the analyte is involved in an equilibrium reaction, this may cause a chemical deviation from Beer's Law. |
| Monochromatic Radiation | Beer's Law assumes purely monochromatic radiation, but in reality, even the best wavelength selector passes radiation with a small but finite effective bandwidth. |
| Stray Radiation | Stray radiation arises from imperfections in the wavelength selector. At higher concentrations, this results in a negative deviation from Beer's Law. |

What You'll Learn

- Beer's Law is not applicable at high concentrations because of the proximity of molecules, which causes deviations in absorptivity

- The law also fails at high concentrations because the refractive index changes

- Beer's Law is not applicable at low concentrations because the amount of radiation absorbed is too small to be detected

- The law does not apply when the analyte molecules interact with each other

- Beer's Law is not applicable when the light used is not purely monochromatic

Beer's Law is not applicable at high concentrations because of the proximity of molecules, which causes deviations in absorptivity

Beer's Law, also known as the Beer-Lambert Law, states that the concentration and absorbance of a given material sample are directly proportional. In other words, a high-concentration solution absorbs more light, while a low-concentration solution absorbs less light.

However, Beer's Law is not applicable at high concentrations due to the proximity of molecules, which causes deviations in absorptivity. This is because the law assumes that the molecules of the solution are independent of each other and do not interact. At higher concentrations, the molecules are more likely to interact with each other, altering their ability to absorb radiation. This interaction affects the analyte's absorptivity, causing a negative deviation from Beer's Law.

Additionally, the refractive index of a solution changes with the concentration of the analyte, which can further impact the absorptivity values. Beer's Law is a limiting law that is valid only for low concentrations, as the linearity of the law is limited by chemical and instrumental factors.

Other factors that can cause deviations from Beer's Law include the presence of stray radiation, the use of polychromatic radiation instead of purely monochromatic radiation, and chemical equilibrium reactions involving the analyte.
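The stray-radiation effect can be sketched numerically. The example below assumes the common textbook model in which a constant amount of stray light reaches the detector in both the blank and the sample measurement, so the reported absorbance is -log10((P + P_stray) / (P0 + P_stray)). The function name and the 0.1 % stray-light level are illustrative assumptions, not values from a specific instrument.

```python
import math


def observed_absorbance(true_absorbance: float, stray_fraction: float) -> float:
    """Absorbance reported when stray light adds to both P and P0.

    stray_fraction is P_stray / P0 (e.g. 0.001 for 0.1 % stray light).
    """
    transmittance = 10 ** (-true_absorbance)
    return -math.log10((transmittance + stray_fraction) / (1.0 + stray_fraction))


# Assumed stray-light level of 0.1 % of the source power
for true_a in (0.5, 1.0, 2.0, 3.0):
    print(f"true A={true_a:.1f}  observed A={observed_absorbance(true_a, 0.001):.3f}")
```

At low absorbance the stray contribution is negligible, but as the true absorbance rises the transmitted power shrinks towards the stray level and the observed absorbance falls increasingly short of the true value, which is the negative deviation described above.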

The law also fails at high concentrations because the refractive index changes

Beer's Law is a limiting law that is valid only for low concentrations of analyte. At higher concentrations, the individual particles of analyte are no longer independent of each other, and the resulting interaction between them may change the analyte's absorptivity.

Additionally, an analyte's absorptivity depends on the solution's refractive index, which varies with the analyte's concentration. At higher concentrations, the refractive index changes, and values of 'a' (absorptivity) and 'ε' (molar absorptivity) may change. For sufficiently low concentrations of analyte, the refractive index remains essentially constant, and a Beer's Law plot is linear.

The law also fails at high concentrations because the molecules in the solution are so close together that their absorptivity deviates from its low-concentration value.

Beer's Law is not applicable at low concentrations because the amount of radiation absorbed is too small to be detected

Beer's Law, a fundamental principle in analytical chemistry, relates the absorption of light to the properties of a substance and the path length of the light through that substance. While this law is widely applied in quantitative analysis, there are certain limitations to its applicability, especially at low concentrations.

One of the primary reasons Beer's Law is difficult to apply at low concentrations is the minimal amount of light absorbed. At very low concentrations, the analyte may absorb so little radiation that instruments struggle to detect and measure the absorption accurately. The linear relationship itself still predicts the absorbance; the problem is that, because absorbance is directly proportional to concentration, an extremely low concentration gives an absorbance that may fall below the detection limit of the instrument, leading to imprecise or even undetectable readings.

The detection limit of an instrument refers to the lowest concentration of a substance that can be reliably detected and quantified. It is influenced by factors such as the sensitivity of the instrument, the wavelength of light used, and the presence of interfering substances. If the concentration of the analyte is below this detection limit, the resulting absorbance values may be too small to be distinguishable from random noise or background signals.

To address this limitation, analysts may employ more sensitive instruments capable of detecting lower levels of absorption. Advanced techniques, such as using a longer path length or concentrating the sample, can also enhance the detectability of low-concentration analytes. However, it is important to recognize that these modifications may introduce other sources of error or deviate from the ideal conditions assumed by Beer's Law, requiring careful calibration and validation of the analytical method.
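The benefit of a longer path length can be made concrete with a small sketch. It assumes that the instrument has some smallest absorbance it can reliably distinguish from noise (here a hypothetical 0.001) and simply inverts the proportionality between absorbance and concentration. The function name and all numerical values are illustrative assumptions.

```python
def min_detectable_concentration(min_absorbance: float, epsilon: float, path_cm: float) -> float:
    """Smallest concentration whose predicted absorbance reaches min_absorbance."""
    return min_absorbance / (epsilon * path_cm)


# Hypothetical analyte with molar absorptivity 5000 L mol^-1 cm^-1
for path_cm in (1.0, 10.0):
    c_min = min_detectable_concentration(0.001, epsilon=5000.0, path_cm=path_cm)
    print(f"path length {path_cm:4.1f} cm -> about {c_min:.1e} mol/L")
```

Under these assumptions, a tenfold longer path length lowers the smallest detectable concentration tenfold, as long as the noise level of the instrument stays the same.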

In summary, Beer's Law may not be applicable at low concentrations due to the minute amount of radiation absorbed, which can fall below the detection capabilities of standard instruments. To work around this limitation, more sensitive equipment or specialized techniques are employed to enhance the detectability of the analyte. Nevertheless, analysts must remain vigilant about potential sources of error and carefully validate their methods to ensure accurate and reliable results when dealing with low-concentration solutions.

The law does not apply when the analyte molecules interact with each other

Beer's Law, also known as the Beer-Lambert law or the Beer-Lambert-Bouguer law, is a fundamental concept in analytical chemistry and molecular spectroscopy. It relates the attenuation of light as it passes through a substance (in other words, the decrease in power or intensity of the light) to the properties of the substance.

The law states that the absorbance, a logarithmic measure of this attenuation, is directly proportional to the path length through the substance and to its concentration. In mathematical terms, this can be expressed as:

\[A = \varepsilon b C\]

Where:

- \(A\) is the absorbance of the substance,

- \(\varepsilon\) is the molar absorptivity or extinction coefficient,

- \(b\) is the path length, and

- \(C\) is the concentration.
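The relation above can be used in both directions: to predict an absorbance from a known concentration, or to recover a concentration from a measured absorbance. The following is a minimal sketch; the function names and the numerical values are illustrative assumptions.

```python
def absorbance(epsilon: float, path_cm: float, conc_molar: float) -> float:
    """Return A = epsilon * b * C (dimensionless)."""
    return epsilon * path_cm * conc_molar


def concentration(absorb: float, epsilon: float, path_cm: float) -> float:
    """Invert Beer's Law: C = A / (epsilon * b)."""
    return absorb / (epsilon * path_cm)


# Illustrative values: epsilon in L mol^-1 cm^-1, b in cm, C in mol/L
A = absorbance(epsilon=5000.0, path_cm=1.0, conc_molar=1.0e-4)
print(A)
print(concentration(A, epsilon=5000.0, path_cm=1.0))
```

The inversion is only meaningful in the concentration range where the linear relationship actually holds.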

However, there are situations where Beer's Law does not hold true. One such scenario is when the analyte molecules (the molecules of the substance being analysed) interact with each other. The law assumes that they do not, and this assumption is valid only at low concentrations. As the concentration increases, the analyte molecules may start to influence each other's behaviour, altering their ability to absorb radiation.

At higher concentrations, the individual analyte molecules are no longer independent of each other. Their interaction can change the analyte's absorptivity. This effect leads to a negative deviation from Beer's Law, meaning the measured absorbance is lower than what the law predicts.

Additionally, the analyte's absorptivity depends on the solution's refractive index, which in turn varies with the analyte's concentration. As a result, the values of \(\varepsilon\) and \(a\) (the absorptivity with units of \(\text{cm}^{-1}\,\text{conc}^{-1}\)) may change, further affecting the accuracy of Beer's Law predictions.

To summarise, Beer's Law is a useful tool for quantitative analysis, but it has limitations. When analyte molecules interact with each other at higher concentrations, their ability to absorb radiation changes, leading to a deviation from the linear relationship predicted by Beer's Law. This highlights the importance of understanding the underlying assumptions and limitations of any scientific law or model when applying it in practice.

Beer's Law is not applicable when the light used is not purely monochromatic

Beer's Law, also known as the Beer-Lambert Law, relates the attenuation of light to the properties of the material through which the light is travelling. The law assumes that the molecules absorbing radiation do not interact with each other and that the radiation reaching the sample is of a single wavelength. However, it is not possible to obtain purely monochromatic radiation using a dispersing element with a slit, and this can affect the accuracy of the law.

Spectroscopic instruments typically use a device called a monochromator to disperse radiation into distinct wavelengths. A slit in the monochromator blocks the wavelengths that are not required and allows only the desired wavelength (usually λmax) to pass through to the sample. However, in reality, the slit has to allow a "packet" of wavelengths through to the sample, not just a single wavelength. This packet is centred on λmax but also includes nearby wavelengths. The width of the slit determines the range of wavelengths that pass through, with narrower slits giving more nearly monochromatic radiation.

While narrower slits lead to more monochromatic radiation and less deviation from Beer's Law, they also have disadvantages. Reducing the slit width lowers the power of radiation (\(P_0\)) reaching the sample and the detector. As \(P_0\) and \(P\) (the radiation reaching the detector) become smaller, the background noise becomes a more significant contribution to the overall measurement. This restricts the signal that can be measured and the detection limit of the spectrophotometer. Therefore, selecting the appropriate slit width involves balancing the desire for high source power with the desire for high monochromaticity.

Additionally, the sample usually has a slightly different molar absorptivity for each wavelength of radiation shining on it. As a result, the total absorbance added over all the different wavelengths is no longer linear with concentration. Instead, a negative deviation occurs at higher concentrations because the radiation is polychromatic. The greater the difference in molar absorptivity across the wavelengths passed, the more pronounced the deviation. Therefore, it is preferable to perform absorbance measurements in a region of the spectrum where the absorption band is relatively broad and flat, to minimise deviations from Beer's Law.
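This effect can be reproduced with a short sketch. It assumes, for simplicity, that the "packet" consists of just two wavelengths with equal incident power, and that the detector sums the transmitted power over both. The function names and the molar absorptivity values are hypothetical and chosen only for illustration.

```python
import math


def apparent_absorbance(epsilons, path_cm, conc_molar):
    """Absorbance seen by a detector that sums power over several wavelengths.

    Assumes equal incident power at each wavelength in the band.
    """
    transmitted = [10 ** (-eps * path_cm * conc_molar) for eps in epsilons]
    return -math.log10(sum(transmitted) / len(transmitted))


def ideal_absorbance(epsilons, path_cm, conc_molar):
    """Straight-line value from the low-concentration slope (mean epsilon)."""
    mean_eps = sum(epsilons) / len(epsilons)
    return mean_eps * path_cm * conc_molar


steep_band = [2000.0, 10000.0]  # absorptivity changes strongly across the band
flat_band = [5900.0, 6100.0]    # absorptivity nearly constant across the band
for conc in (1e-5, 1e-4, 3e-4):
    for name, band in (("steep", steep_band), ("flat", flat_band)):
        ideal = ideal_absorbance(band, 1.0, conc)
        seen = apparent_absorbance(band, 1.0, conc)
        print(f"{name:5s} C={conc:.0e} ideal={ideal:.3f} apparent={seen:.3f}")
```

In this model the apparent absorbance never exceeds the straight-line value, the shortfall grows with concentration, and it is much smaller for the flat band than for the steep one.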

Frequently asked questions

Beer's Law is difficult to apply to very dilute solutions because the amount of radiation absorbed may be too small for the instrument to detect.

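Beer's Law becomes unreliable for highly concentrated solutions, for example of a particular metal ion, because the absorbance rises above about 1. An absorbance of 1 does not mean that all the light is absorbed, but it does mean that only about 10% of the incident light reaches the detector, so stray radiation and noise make up a growing share of the signal.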
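For reference, absorbance and the fraction of light transmitted are related by the standard definition A = -log10(P/P0). A minimal sketch of the conversion follows; the function name is an illustrative assumption.

```python
def percent_transmitted(absorbance: float) -> float:
    """Percentage of incident light reaching the detector, from T = 10**(-A)."""
    return 100.0 * 10 ** (-absorbance)


for a in (0.1, 1.0, 2.0, 3.0):
    print(f"A={a:.1f} -> {percent_transmitted(a):.3g} % transmitted")
```

Each additional absorbance unit cuts the transmitted light by a further factor of ten, which is why measurements at high absorbance depend on detecting very small amounts of light.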

Beer's Law assumes that the incident radiation is purely monochromatic, but in reality, even the best wavelength selector passes radiation with a small but finite effective bandwidth. This will always give a negative deviation from Beer's Law.

Beer's Law assumes that the molecules of the solute are not interacting with each other. However, at high concentrations, the molecules are more likely to interact, which can alter their ability to absorb radiation and lead to a negative deviation from Beer's Law.